Karen Titus

August 2022—Just as there is scant room in this world for pink “While You Were Out” notepads, paper checks, or the copying skills of Bartleby the Scrivener, laboratories would do well to leave manual result verification in the past.

But the need for such verification persists, with labs looking for ways to bring processes in line with automated analyzers and high-throughput testing, said Mayo Clinic’s Darci Block, PhD, DABCC, who talked about her experiences in a presentation at the Pathology Informatics Summit 2022 in May. Doing so is all the more pressing given the ongoing labor shortage in laboratories.

Result verification had become tedious and time-consuming when manual methods were applied in speeded-up laboratories. “And given the mundane nature of it, led to operator fatigue and potential for errors, and ultimately rendered itself impractical to do in this manner,” said Dr. Block, co-director of Mayo’s central clinical laboratory. In short, this older approach to the task has fallen into “I would prefer not to” territory.

Given the importance of result verification (a last opportunity to identify errors, a chance to avoid reporting inaccurate results, and a means of ensuring compliance), labs would do well to ask if autoverification makes sense, Dr. Block said. The relevant CLSI guideline (“Auto15: Autoverification of Medical Laboratory Results for Specific Disciplines,” 1st ed., 2019) can help labs decide when it’s needed, for instance when (ahem) there’s a shortage of laboratory technologists, to comply with quality requirements, or to meet turnaround time expectations.


Autoverification is, at its heart, a tale of filters. Raw results from an instrument are sent through middleware software that basically replicates the manual work done by a technologist, comparing results against laboratory-defined acceptance parameters for a number of filters, such as quality control within allowable limits, absence of instrument flags (or similar errors), within allowable HIL limits (is the sample too hemolyzed, icteric, or lipemic?), and limit checks (is the result within the analytical measurement range? is dilution required? is it questionably low?).
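To make the “tale of filters” concrete, here is a minimal Python sketch of such a chain, assuming each filter either passes a result or returns a reason to hold it. The class names, field names, and the idea of one limits object per analyte are illustrative assumptions, not the data model of any particular middleware product or of Mayo Clinic’s system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class RawResult:
    """One instrument result plus the metadata the filters inspect (hypothetical model)."""
    analyte: str
    value: float
    qc_in_limits: bool                      # was the run's QC within allowable limits?
    instrument_flags: List[str] = field(default_factory=list)
    h_index: float = 0.0                    # hemolysis index
    i_index: float = 0.0                    # icterus index
    l_index: float = 0.0                    # lipemia index


@dataclass
class AcceptanceLimits:
    """Hypothetical laboratory-defined acceptance parameters for one analyte."""
    amr_low: float                          # low end of the analytical measurement range
    amr_high: float                         # high end of the analytical measurement range
    h_max: float
    i_max: float
    l_max: float


# Each filter returns None if the result passes, or a reason string if it must be held.
Filter = Callable[[RawResult, AcceptanceLimits], Optional[str]]


def qc_filter(r: RawResult, lim: AcceptanceLimits) -> Optional[str]:
    return None if r.qc_in_limits else "QC outside allowable limits"


def flag_filter(r: RawResult, lim: AcceptanceLimits) -> Optional[str]:
    return "instrument flags: " + ", ".join(r.instrument_flags) if r.instrument_flags else None


def hil_filter(r: RawResult, lim: AcceptanceLimits) -> Optional[str]:
    if r.h_index > lim.h_max:
        return "hemolysis index above limit"
    if r.i_index > lim.i_max:
        return "icterus index above limit"
    if r.l_index > lim.l_max:
        return "lipemia index above limit"
    return None


def limit_filter(r: RawResult, lim: AcceptanceLimits) -> Optional[str]:
    if r.value > lim.amr_high:
        return "above AMR: dilution required"
    if r.value < lim.amr_low:
        return "below AMR: questionably low"
    return None


FILTERS: List[Filter] = [qc_filter, flag_filter, hil_filter, limit_filter]


def autoverify(r: RawResult, lim: AcceptanceLimits) -> Tuple[bool, List[str]]:
    """Run every filter; the result is autoverified only if no filter asks for a hold."""
    reasons: List[str] = []
    for check in FILTERS:
        reason = check(r, lim)
        if reason is not None:
            reasons.append(reason)
    return not reasons, reasons
```

Running every filter, rather than stopping at the first failure, gives the technologist the full list of reasons when a held result lands in the review queue.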

As with all rules, these spring from the needs of the users, which can vary from one lab to the next. At Mayo Clinic, Dr. Block noted, “We do like when these rules hold things that are actionable. So it might mean that you have to pour the sample over into a false bottom tube, check for a clot, or repeat the testing with increasing or decreasing volume,” to name a few examples. Other commonly used rules address critical values and delta checks. (Delta checks, which help identify misidentified samples, may now be of more historical significance, though labs continue to evaluate them as use of positive patient ID systems expands.)
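Critical-value and delta-check rules can be sketched in a few lines, assuming a critical rule holds any result outside a low/high pair and a delta check holds a result that differs too much from the same patient’s previous value. All limits below are hypothetical placeholders, and real delta checks usually also consider how much time has passed between the two samples, which this sketch leaves out.

```python
from typing import Dict, Optional, Tuple

# HYPOTHETICAL critical limits (low, high) per analyte; not Mayo Clinic values.
CRITICAL_LIMITS: Dict[str, Tuple[float, float]] = {"K": (2.5, 6.5), "Na": (120.0, 160.0)}
# HYPOTHETICAL maximum absolute change allowed versus the patient's previous result.
DELTA_LIMITS: Dict[str, float] = {"K": 1.0, "Na": 8.0}


def critical_value_hold(analyte: str, value: float) -> bool:
    """Hold results outside the critical range so they can be called in, not just released."""
    low, high = CRITICAL_LIMITS[analyte]
    return value < low or value > high


def delta_check_hold(analyte: str, value: float, previous: Optional[float]) -> bool:
    """Hold results that changed implausibly since the last result (possible misidentified sample)."""
    if previous is None:  # no prior result on file, so the delta check cannot apply
        return False
    return abs(value - previous) > DELTA_LIMITS[analyte]
```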

Her institution uses a number of logic/consistency rules. Among them:

- Is the direct bilirubin greater than the total bilirubin? “It shouldn’t be,” she said.

- Comparing I index to the total protein concentration. “You can identify interferences caused by paraproteins and other inconsistent results for further troubleshooting.”

- Anion gap.

- The calcium-phosphorus product (calcium times phosphorus).

- The sodium-to-chloride ratio.
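Most of the logic rules in that list reduce to simple arithmetic on results from the same sample. The sketch below assumes the standard anion gap formula (Na − [Cl + HCO3]) and invents the acceptance bounds and analyte codes for illustration; none of them are Mayo Clinic settings. The I-index comparison is left out because its cutoffs depend on assay-specific behavior.

```python
from typing import Dict, List

# HYPOTHETICAL bounds for illustration only; not Mayo Clinic or CLSI values.
ANION_GAP_RANGE = (3.0, 30.0)        # mmol/L
CA_X_PHOS_MAX = 70.0                 # (mg/dL) x (mg/dL)
NA_CL_RATIO_RANGE = (1.2, 1.6)


def consistency_holds(panel: Dict[str, float]) -> List[str]:
    """Return the consistency rules that fail for one sample; an empty list means no hold.
    A rule is evaluated only when all of its inputs were measured on the sample."""

    def has(*analytes: str) -> bool:
        return all(a in panel for a in analytes)

    holds: List[str] = []
    # Direct bilirubin is a fraction of total bilirubin, so it should never exceed it.
    if has("TBIL", "DBIL") and panel["DBIL"] > panel["TBIL"]:
        holds.append("direct bilirubin exceeds total bilirubin")
    if has("Na", "Cl", "HCO3"):
        gap = panel["Na"] - (panel["Cl"] + panel["HCO3"])
        if not ANION_GAP_RANGE[0] <= gap <= ANION_GAP_RANGE[1]:
            holds.append("anion gap out of range")
    if has("Ca", "PHOS") and panel["Ca"] * panel["PHOS"] > CA_X_PHOS_MAX:
        holds.append("calcium x phosphorus product above limit")
    if has("Na", "Cl"):
        ratio = panel["Na"] / panel["Cl"]
        if not NA_CL_RATIO_RANGE[0] <= ratio <= NA_CL_RATIO_RANGE[1]:
            holds.append("sodium/chloride ratio out of range")
    return holds
```

For a typical panel (Na 140, Cl 104, HCO3 24, Ca 9.5, phosphorus 3.5, total bilirubin 1.0, direct bilirubin 0.3) every rule passes and the list comes back empty.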

At Mayo Clinic, Dr. Block said, “Our laboratory also uses what I would classify as process check rules. We’ll hold results where a sample treatment step such as polyethylene glycol precipitation or filtration was supposed to be performed, because in our highly automated and high-volume workflow, ensuring that manual processes such as these are performed prior to result release has the potential to be overlooked.

“So we choose to hold these results,” she continued, “and then verify that that processing was actually performed and final results are consistent with expected values,” versus autoverifying the results.
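A process check rule differs from the other filters in that it holds a result because of how the sample was supposed to be handled, not because of the value itself. A minimal sketch, assuming hypothetical fields that record which manual pretreatments were ordered and whether a technologist has signed off:

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PendingResult:
    """Hypothetical record of a result awaiting release."""
    analyte: str
    value: float
    # Manual pretreatment steps the order called for, e.g. "PEG precipitation" or "filtration".
    required_pretreatments: List[str] = field(default_factory=list)
    # Set once a technologist confirms the step was done and the result looks consistent.
    technologist_verified: bool = False


def process_check_hold(r: PendingResult) -> bool:
    """Never autoverify a result whose sample needed a manual pretreatment step;
    hold it until a technologist has verified it."""
    return bool(r.required_pretreatments) and not r.technologist_verified
```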

Fig. 1. Customizing hemolysis thresholds using analyte-specific middleware rules

Several other scenarios are especially ripe for machine learning and similar types of logic, she suggested. Among them: identifying IV fluid contamination from samples drawn from peripheral and central lines while medications are co-administered, EDTA contamination caused by order of draw errors, and pseudohyperkalemia caused by reasons other than hemolysis.

The general approach at Mayo, Dr. Block said, focuses on holding results where the technologist adds value to the work in process. “If we’re just holding things to hold,” she said, “it’s not helpful and possibly just delaying results.”

The central clinical laboratory is Mayo Clinic’s core lab, which tests some 8,000 specimens daily in chemistry, immunoassay, coagulation, and hematology. It includes three analytic lines that perform chemistry and immunoassay testing, as well as connected hematology automation. “We recently replaced aging Roche modular preanalytics with the Cobas Connection Modules. We have three p 612, p 471 modules that will centrifuge [and] do some limited aliquoting and sorting of specimens to these different destinations,” she said. The lab also has two p 701 refrigerated storage units that can store chemistry samples for the seven-day retention time.

Staff describe the lab as fast-paced, she said, and little wonder. The heaviest workflow occurs between 7 AM and 2 PM, Monday through Friday. Based on her own quick calculations, the lab averages one result per second over a 24-hour period, but because so much of that volume falls within the peak hours, the actual pace is much more intense.
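The gap between the average and the peak rate is simple arithmetic. One result per second over 24 hours is 86,400 results a day; the 7 AM to 2 PM window is 7 hours. The article does not say what share of results falls in that window, so the 50% used below is purely hypothetical.

```python
SECONDS_PER_HOUR = 3600


def peak_rate(avg_results_per_s: float, peak_hours: float, peak_share: float) -> float:
    """Results per second during the peak window, given the 24-hour average rate and the
    (assumed) fraction of the day's results that fall inside that window."""
    daily_results = avg_results_per_s * 24 * SECONDS_PER_HOUR
    return daily_results * peak_share / (peak_hours * SECONDS_PER_HOUR)


# HYPOTHETICAL: half of the day's 86,400 results land in the 7-hour peak window.
print(round(peak_rate(1.0, 7, 0.5), 2))  # 1.71
```

More generally, the peak rate exceeds the one-per-second average whenever more than 7/24 (about 29%) of the day’s results fall within those seven hours.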

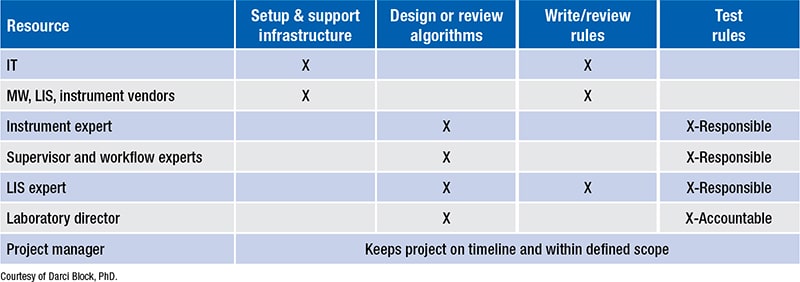

Fig. 2. Middleware support plan

Go back to basics: CLSI Auto15

The lab uses automated serum indices to assess specimen integrity. The middleware applies either the manufacturer’s limits or lab-defined thresholds. Serum indices are performed for all specimens, “and then we’ve customized our rules and programmed them into the middleware to hold only results where the serum index threshold is exceeded,” she said. If a threshold is exceeded, that result is not reported and a redraw request is submitted.

She offered the example of hemolyzed specimens. Middleware rules are programmed with analyte-specific thresholds. On a basic metabolic panel, if the potassium was identified as having hemolysis above threshold, this specific result would be held, while the other analytes would be autoverified and reported. The potassium would be re-collected with a potassium order. “With any luck, that sample will not be hemolyzed,” and the lab can report the result.
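The basic metabolic panel example amounts to splitting one panel into released and held analytes based on a single H-index reading. A minimal sketch, assuming analyte-specific H-index limits stored in a lookup table; the limits and analyte codes are hypothetical, and analytes without a configured limit are treated as hemolysis-insensitive in this sketch.

```python
from typing import Dict, List, Tuple

# HYPOTHETICAL analyte-specific H-index limits; real limits come from the manufacturer
# or from the laboratory's own interference studies.
H_INDEX_LIMITS: Dict[str, float] = {"K": 40.0, "AST": 60.0}


def split_by_hemolysis(panel: Dict[str, float], h_index: float) -> Tuple[Dict[str, float], List[str]]:
    """Release analytes whose H-index limit is not exceeded; hold the rest for re-collection."""
    released: Dict[str, float] = {}
    held: List[str] = []
    for analyte, value in panel.items():
        if h_index > H_INDEX_LIMITS.get(analyte, float("inf")):
            held.append(analyte)
        else:
            released[analyte] = value
    return released, held
```

With a moderately hemolyzed panel, only potassium ends up in `held`, and each held analyte would then get its own re-collection order, as in the potassium example.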

The lab performed experiments to extend the hemolysis thresholds for additional hemolysis-sensitive analytes, including direct bilirubin, AST, and troponin (Fig. 1). “We derive these rules based on measured concentrations to reduce our rejection rates,” she explained. For example, previous thresholds led to too many needless re-collections for AST testing. “Hemolysis causes a falsely elevated result; therefore as AST concentration increases, the proportion that is falsely increased diminishes. So we were able to extend the H-index limit as the AST concentration went up,” Dr. Block said.
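Dr. Block’s reasoning about AST can be expressed as a concentration-dependent limit. The sketch below assumes a simple linear interference model, in which hemolysis adds a bias proportional to the H index, and a fixed allowable relative bias. Both constants are hypothetical; the lab’s actual rules were derived experimentally from measured concentrations, not from this formula.

```python
# HYPOTHETICAL interference model: hemolysis adds a bias proportional to the H index.
BIAS_PER_H_UNIT = 0.2        # U/L of falsely elevated AST per H-index unit (assumed)
ALLOWABLE_REL_BIAS = 0.10    # largest tolerated fraction of the result due to hemolysis (assumed)


def ast_h_limit(ast_u_per_l: float) -> float:
    """Largest H index whose bias stays within ALLOWABLE_REL_BIAS of the measured AST.
    bias = BIAS_PER_H_UNIT * H; requiring bias / AST <= ALLOWABLE_REL_BIAS gives
    H <= ALLOWABLE_REL_BIAS * AST / BIAS_PER_H_UNIT, so the limit rises with AST."""
    return ALLOWABLE_REL_BIAS * ast_u_per_l / BIAS_PER_H_UNIT


def hold_ast(ast_u_per_l: float, h_index: float) -> bool:
    """Hold the AST result only if the H index exceeds the concentration-specific limit."""
    return h_index > ast_h_limit(ast_u_per_l)
```

Under these assumed constants, an H index of 40 would hold an AST of 30 U/L (limit 15) but release an AST of 300 U/L (limit 150), which is the pattern she described: the same degree of hemolysis matters less as the concentration climbs.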

CAP TODAY Pathology/Laboratory Medicine/Laboratory Management
